8 enablers identified by McKinsey to deliver value from your data at scale and how data portals can help

Why do many companies fail to effectively democratize data access? According to new insights from McKinsey, it could be due to an overly narrow focus on use cases at the expense of building a scalable data value chain. This blog post explains the key components you need to industrialize data use and unlock greater value.

Organizations recognize the importance of using data to increase efficiency, drive innovation and underpin better decision-making. However, the data projects they launch often fail to deliver value at scale. New insights from consulting firm McKinsey explain why this happens and outline the technical enablers organizations should focus on to industrialize data use.

The good news is that implementing data portals helps organizations meet these requirements, enabling next-generation use cases and unlocking the wider value of their data.

The need for a wider focus in data projects

The traditional approach to better exploiting data is to focus on specific use cases. As McKinsey points out, this can be the best way to get projects signed off and secure buy-in from business departments and users. It also means that organizations can address pressing needs and opportunities more quickly. However, it can inadvertently encourage a narrow focus on individual projects at the expense of creating a wider, foundational infrastructure for data sharing and reuse. The result is a lack of synergies: every new project has to reinvent the wheel, starting from scratch instead of building on what has already been implemented. This shows up in three key areas:

- A lack of scalable infrastructure means that the tech/data stack cannot accommodate new projects without requiring substantial upgrades and investment, while adding to maintenance overheads.

- Poor automation of processes, such as data formatting and standardization, introduces the risk of manual errors creeping in and adds to workloads for data teams.

- A lack of company-wide governance and monitoring solutions means that ad-hoc or departmental data governance projects are not standardized, introducing a real threat of security and confidentiality breaches that risk large fines and reputational damage.

Altogether, these factors increase costs, introduce technical debt and duplication, and create unforeseen risks for the organization. Essentially, they prevent organizations from industrializing the value of their data, which remains siloed within individual, small-scale projects.

8 enablers to deliver wider data projects

Putting in place the essential building blocks

Rather than focusing only on specific use cases, taking a wider view and implementing a strong foundation for data sharing delivers significant cost and time benefits. McKinsey states that “the right implementation of investments across the data value chain could reduce execution time for use cases by three to six months and the total cost of data ownership by 10 to 20 percent.”

So what are these building blocks? McKinsey highlights eight enablers that deliver wider data project success, split between foundational capabilities and next-generation use cases:

Foundational capabilities to create value:

1. A service-oriented organization and platform

Data needs to be easily available to everyone across the organization and within a company’s wider ecosystem. This encourages people to experiment with data and create their own use cases without needing to launch dedicated projects. A key part of this service-oriented organization is a self-service data portal that makes data assets easily findable and accessible to all. It should be intuitive and easy to use, connect data producers with consumers, and include full management and monitoring capabilities that show which data assets are being used, where, and the value they deliver.

2. Next-generation data quality optimization

Data quality is key to data being trusted and consumed at scale. Automating data quality checks and applying processors to standardize formats saves time and helps ensure consistent quality. This in turn leads to greater usage and confidence from users.
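To make automated checks and format-standardizing processors more concrete, here is a minimal Python sketch. It does not describe how any particular portal implements these features: the field names (`station_id`, `date`, `value`), the accepted date formats and the function names are all hypothetical. Each record is normalized (dates converted to ISO 8601, values converted to numbers) and checked for missing or malformed fields; records with problems are flagged for review instead of being published.

```python
from datetime import datetime
from typing import Optional

# Hypothetical schema for illustration: every record must carry these fields.
REQUIRED_FIELDS = ("station_id", "date", "value")
# Date formats the processor accepts (an assumption, not a real portal setting).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")


def standardize_date(raw: str) -> Optional[str]:
    """Processor: convert a date string to ISO 8601 (YYYY-MM-DD), or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def check_and_standardize(record: dict) -> tuple[dict, list[str]]:
    """Apply processors and quality checks; return the cleaned record and any problems."""
    cleaned = dict(record)  # work on a copy so the input record is not mutated
    problems = []
    # Check: required fields must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if cleaned.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Processor: normalize dates to a single format.
    if cleaned.get("date"):
        iso = standardize_date(str(cleaned["date"]))
        if iso is None:
            problems.append("unrecognized date format")
        else:
            cleaned["date"] = iso
    # Processor + check: values must be numeric.
    if cleaned.get("value") not in (None, ""):
        try:
            cleaned["value"] = float(cleaned["value"])
        except (TypeError, ValueError):
            problems.append("value is not numeric")
    return cleaned, problems


def run_pipeline(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into those that pass every check and those flagged for review."""
    accepted, flagged = [], []
    for record in records:
        cleaned, problems = check_and_standardize(record)
        if problems:
            flagged.append((record, problems))
        else:
            accepted.append(cleaned)
    return accepted, flagged


if __name__ == "__main__":
    sample = [
        {"station_id": "A1", "date": "03/02/2024", "value": "12.5"},
        {"station_id": "A2", "date": "2024-02-03", "value": "n/a"},
        {"station_id": "", "date": "Feb 3", "value": "7"},
    ]
    ok, bad = run_pipeline(sample)
    print(ok)
    print(bad)
```

Flagging rather than silently dropping bad records keeps a person in the loop for edge cases while the routine checking runs automatically.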

3. Data governance by design

Ensuring that data is collected, processed and shared in accordance with corporate data governance rules is key to meeting compliance requirements and protecting confidential information. Data governance processes need to be at the heart of data sharing, covering not just the data itself but also who can access it, through rule-based access to specific datasets based on factors such as role and seniority.
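Rule-based access can be sketched as a small deny-by-default policy check. The Python example below is a hypothetical illustration, not a real portal API: the roles, seniority levels, dataset names and the `can_access` and `visible_datasets` functions are all assumptions. A user can open a dataset only if some rule for that dataset lists their role and their seniority meets the rule's minimum.

```python
from dataclasses import dataclass

# Hypothetical seniority levels, ordered from lowest to highest.
SENIORITY = {"junior": 1, "senior": 2, "executive": 3}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    seniority: str


@dataclass(frozen=True)
class AccessRule:
    """Grants access to one dataset for the listed roles at or above a minimum seniority."""
    dataset: str
    allowed_roles: frozenset[str]
    min_seniority: str


# Hypothetical rules for illustration; a real platform would manage these centrally.
RULES = [
    AccessRule("public-kpis", frozenset({"analyst", "finance", "hr"}), "junior"),
    AccessRule("payroll", frozenset({"hr", "finance"}), "senior"),
    AccessRule("board-reports", frozenset({"finance"}), "executive"),
]


def can_access(user: User, dataset: str, rules: list[AccessRule] = RULES) -> bool:
    """Deny by default; allow only if a rule for the dataset matches role and seniority."""
    for rule in rules:
        if (
            rule.dataset == dataset
            and user.role in rule.allowed_roles
            and SENIORITY[user.seniority] >= SENIORITY[rule.min_seniority]
        ):
            return True
    return False


def visible_datasets(user: User, rules: list[AccessRule] = RULES) -> list[str]:
    """List, in alphabetical order, every dataset this user is allowed to see."""
    return sorted({r.dataset for r in rules if can_access(user, r.dataset, rules)})


if __name__ == "__main__":
    alice = User("alice", "hr", "senior")
    print(can_access(alice, "payroll"))
    print(visible_datasets(alice))
```

Denying by default means a dataset nobody has written a rule for stays private, which is the safer failure mode for confidential information.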

4. A resilient architecture

Data management architectures have to be able to cover the full data lifecycle, including managing master data, customer relationships, and reference data. They need to be able to scale securely as usage grows. Adopting a cloud-based architecture delivers this flexibility and resilience across an organization’s global operations.

Requirements to deliver next-generation use cases:

1. Unified data pools

Bringing together data, such as through a data mesh architecture, makes it more accessible for the entire organization. It encourages experimentation and the faster development of standardized data products or use cases, all built on the same flexible data storage architecture.

2. Standardized integration with third-party data providers

Platforms need to connect to all internal sources of data, but also act as a hub for data from third-party providers and public data sources. All of this data should be available through the same data portal, alongside internal data, enabling it to be easily accessed and consumed. This maximizes its value and enables greater collaboration across ecosystems.

3. Dashboards and data democratization

People want to access data assets in different ways. While some will want raw datasets, others will find it easier and more intuitive to consume and reuse data assets such as visualizations, maps, stories and dashboards. Making data available in a variety of ways drives data democratization, as every employee is able to find the right data assets to help them in their roles.

4. Streamlined management and operations

Automating data management, such as through AI tools, reduces the resources needed to support data users. This frees up time for data teams to better understand user needs and to reach out across the organization to find new data assets that can be shared through data portals.

How do data portals help deliver usage at scale?

As McKinsey’s analysis shows, focusing solely on individual use cases at the start of a data project risks embedding an overly narrow approach that does not scale to meet the needs of the wider organization.

Instead, creating and scaling data portals as part of your approach allows you to deliver foundational value from data while providing the enablers for next-generation use cases. Because it makes data assets accessible throughout the organization, the data portal is a key solution for CDOs, CIOs and data managers. As a one-stop shop, it provides self-service access to data assets for the widest possible range of stakeholders, from in-house teams to external public or private audiences.

Integrated with your data management stack, data portals help data managers improve administration while giving portal users seamless access to data.

Putting in place a full data value chain, centered on an intuitive, self-service data portal that is available to all, therefore helps scale data usage and its benefits across the business, democratizing data and industrializing its use.

To be truly useful, energy data needs to be comprehensive and easily understandable by all. Read how Agence ORE is delivering on this need through its unified energy data portal.

In today’s digital-first world, data sharing and use is essential to effective local government operations. Based on our recent webinar, Opendatasoft customers the City of Kingston and the Town of Cary explain how data portals are helping them to deliver on the needs of their citizens and employees.

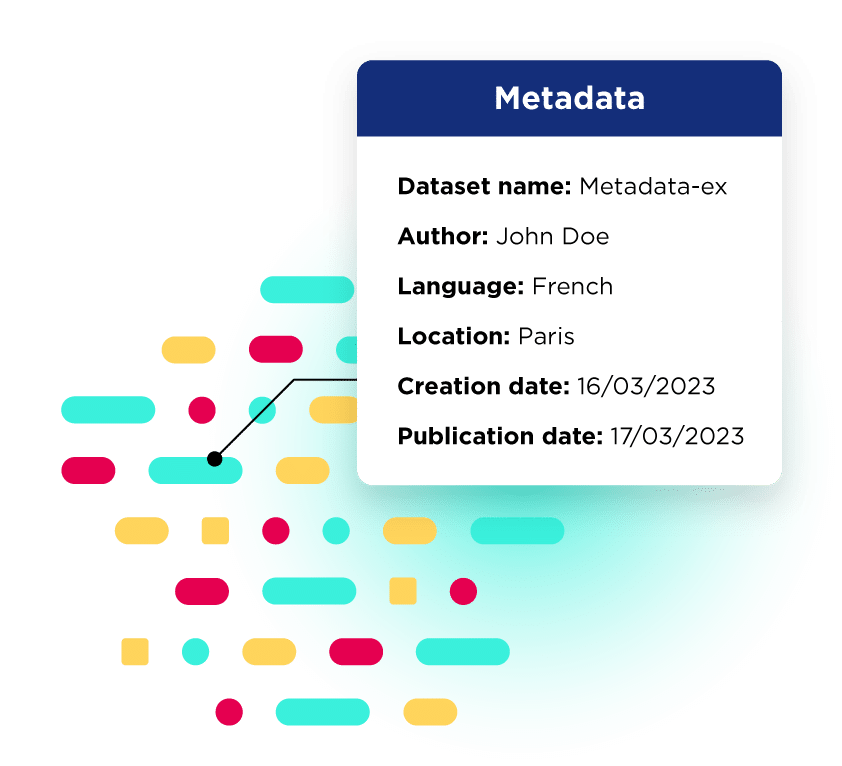

Learn more about the metadata templates available on our data portal solution and how they help to improve data quality and compliance, increase efficiency and save time on a daily basis.