Opendatasoft Hackathon: exploring the potential of Artificial Intelligence in data management tools

What benefits could AI bring to users of SaaS-based data management software? To find out, Opendatasoft organized an internal hackathon that brought together over 30 developers in order to test ideas for improving our platform using AI. Read all about the results of these two days of intense work in this article.

Artificial Intelligence (AI) and Machine Learning (ML) are becoming part of many technologies, revolutionizing existing processes in terms of efficiency, speed and accuracy.

Artificial Intelligence: a revolution for SaaS data management platforms?

When we talk about AI and ML, we often think of the huge volumes of data that feed these models.

But these techniques can also help automate many manual, repetitive processes, enabling employees to focus on higher value-added tasks. Increasingly, organizations are using AI and ML to improve their processes and become more efficient.

Organizations differ in their level of AI maturity, so democratizing these technologies and increasing their adoption remains a major challenge.

A hackathon to explore the benefits of Artificial Intelligence for the Opendatasoft platform

Opendatasoft’s SaaS-based data portal solution plays a central role in organizations’ data strategies. That’s why our teams are particularly interested in the capabilities of AI and ML to help accelerate our customers’ work to enhance and democratize their data.

To underline this commitment, we organized a hackathon dedicated to AI. The aim was to work in a very short timeframe on one or more new features that could enhance our platform.

On July 4-5, 2023, 30+ developers from our Infrastructure, Security, Engineering, and Customer Services teams got together to test more than 10 ideas for enhancing our solution with AI.

Over the two days we achieved several objectives:

Inspiring Product Managers

The first aim of the hackathon was to inspire our Product Managers in charge of the evolution of Opendatasoft’s solutions and products.

As AI is a complex and still-developing field, it was important to test several hypotheses to identify opportunities for improvement. These tests highlighted several challenges for our technical teams:

- The difficulty of developing certain functionalities

- The security risks associated with AI

- The relevance or added value of specific features for our users

At the end of the hackathon, participants presented the areas they had explored and the lessons learned to the Product Managers.

Artificial Intelligence wasn't part of our "toolbox" when we were designing a feature. Thanks to this hackathon, we were able to use AI to improve a number of functionalities in order to validate or invalidate certain hypotheses. We can already foresee the benefits this approach could bring to our users.

A better understanding of Artificial Intelligence issues

At Opendatasoft, we share the conviction that AI can automate a number of tasks and processes for our customers. But it also raises many questions about security and access to the required skills.

This hackathon was therefore an opportunity to deepen our teams’ knowledge of the subject, in particular through the use of ready-to-use APIs (such as those from OpenAI), local Large Language Models (LLMs) and classic ML libraries (such as TensorFlow).

Some team members had already worked on Artificial Intelligence in previous roles, but we had never initiated a real in-house project on the subject. This hackathon enabled us to experiment with AI technologies and develop expertise in their use as a tool for future product development.

Building team skills and cohesion

Last but not least, the hackathon was designed to improve the skills of our development teams. The usual teams were deliberately reshuffled, giving employees the opportunity to work intensively with new colleagues and triggering fresh thinking.

As a result, the teams were able to exchange best practice and help each other tackle the thorny issue of Artificial Intelligence.

The scope of functions offered by the Opendatasoft platform is very broad. Our busy day-to-day development schedule sometimes leaves little room for experimentation and inter-team exchanges. The hackathon overcomes this problem: for two days, teams mix and experiment with the latest technology trends - in this case AI. The results are there for all to see: new links are forged between colleagues, and innovative new projects are born!

How can AI improve the Opendatasoft platform?

In the run-up to the hackathon, our teams gathered over 30 ideas for improvements to be tested. These came not only from the Technical and Product teams, but also from discussions with our customers.

Each participant was free to choose the topic they wished to work on during the hackathon, and the groups comprised between 2 and 6 people. Several projects were selected:

- Automatically pre-generate a complete schema configuration of a dataset (types, facets) to save publication managers considerable time and improve the quality of published data.

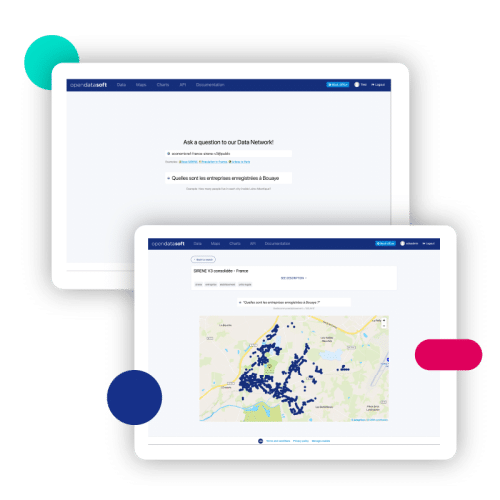

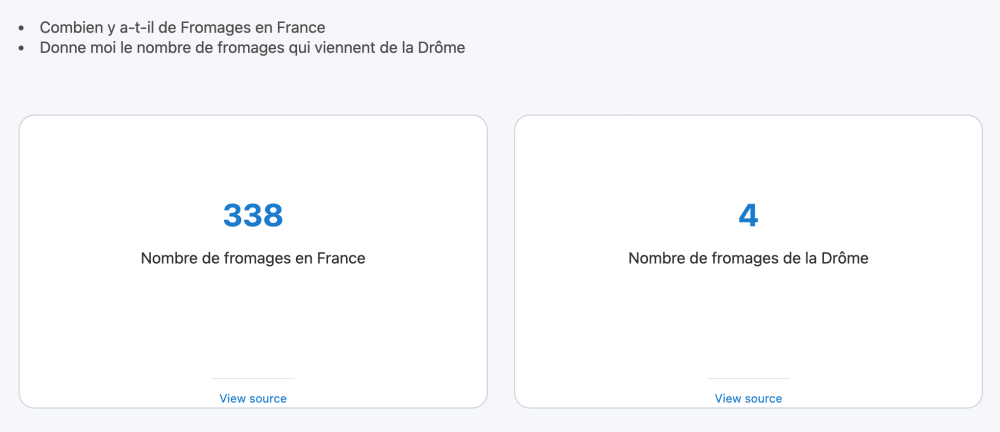

- Automatically translate natural language queries into Explore API requests, enabling data consumers to query data by asking simple questions, without having to understand the ODSQL query language.

- Add a dataset recommendation module based on the semantic similarities of a portal’s dataset metadata.

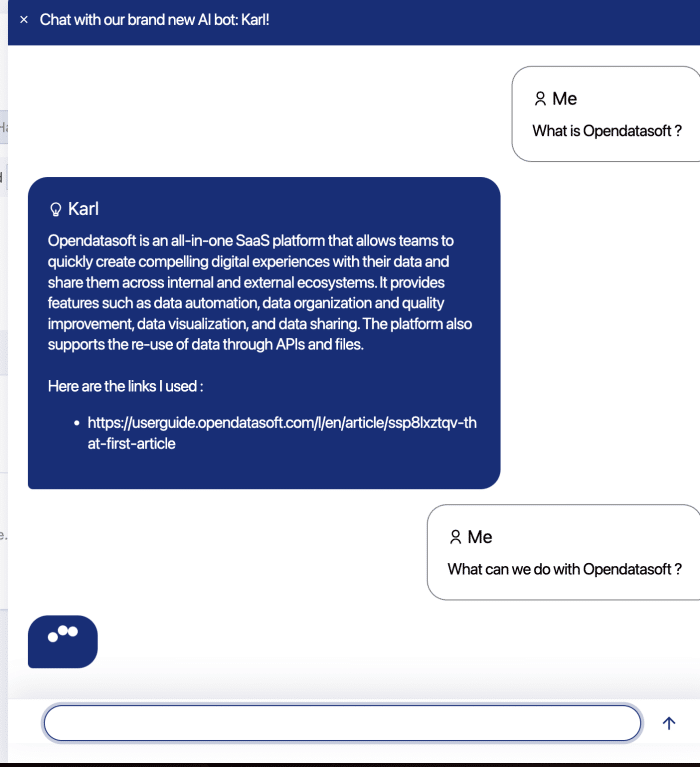

- Allow Opendatasoft users to ask specific questions about the product and its use, using a local Large Language Model trained on our documentation.

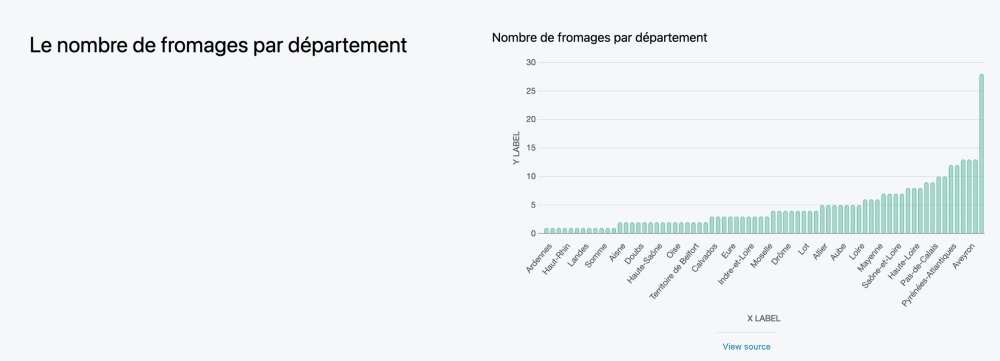

- Automatically generate data visualization blocks in the Opendatasoft Studio from a natural language request. For example: “I want to display the dataset of notable trees on a map of Paris to see how many trees there are in each district”.

- Improve search across portal metadata and datasets by using Elasticsearch’s vector search capabilities.
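
The recommendation and vector search ideas above both come down to ranking datasets by how close their metadata is to a reference text. A minimal Python sketch of that ranking step follows. It is not the hackathon teams' implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the `catalog` contents and dataset identifiers are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term count."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target_id: str, catalog: dict[str, str], top_n: int = 3) -> list[tuple[str, float]]:
    """Rank the other datasets by similarity of their metadata to the target's."""
    target_vec = embed(catalog[target_id])
    scores = [
        (dataset_id, cosine(target_vec, embed(text)))
        for dataset_id, text in catalog.items()
        if dataset_id != target_id
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:top_n]


# Hypothetical metadata (title plus description) for three datasets.
catalog = {
    "notable-trees": "Notable trees of Paris by district with species and height",
    "street-trees": "Street trees of Paris with species and planting year",
    "bike-counters": "Hourly bicycle counts from counters across the city",
}

if __name__ == "__main__":
    for dataset_id, score in recommend("notable-trees", catalog):
        print(f"{dataset_id}: {score:.2f}")
```

With this sample catalog, `street-trees` ranks first for `notable-trees`, because the two descriptions share six terms while the bicycle dataset shares none. In a real system, the term counts would be replaced by dense vectors from an embedding model, with a vector index handling the nearest-neighbor lookup.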

Each team proposed research approaches for its project and then demonstrated the results to the other participants.
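
As an illustration of the first idea in the list above, pre-generating a schema configuration, here is a rule-based Python baseline that guesses a type for each column and flags low-cardinality text columns as facet candidates. It is a sketch under assumptions, not the AI-based approach tested during the hackathon: the type labels, the `max_facet_values` threshold and the sample rows are all hypothetical.

```python
from datetime import date


def infer_type(values: list[str]) -> str:
    """Return the first type label whose parser accepts every non-empty value."""
    non_empty = [v.strip() for v in values if v.strip()]
    if not non_empty:
        return "text"
    # Try the narrowest type first; fall back to text if nothing fits.
    for label, parser in (("int", int), ("double", float), ("date", date.fromisoformat)):
        try:
            for value in non_empty:
                parser(value)
            return label
        except ValueError:
            continue
    return "text"


def suggest_schema(rows: list[dict[str, str]], max_facet_values: int = 20) -> dict[str, dict]:
    """Suggest a type and a facet flag for each column of a small data sample."""
    if not rows:
        return {}
    schema = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        field_type = infer_type(values)
        distinct = set(values)
        # A text column whose values repeat, with few distinct values,
        # is treated as a plausible facet.
        is_facet = (
            field_type == "text"
            and len(distinct) < len(values)
            and len(distinct) <= max_facet_values
        )
        schema[column] = {"type": field_type, "facet": is_facet}
    return schema


# Hypothetical sample rows, with every value read as a string (as from a CSV file).
rows = [
    {"species": "Plane tree", "district": "11", "height_m": "22.5", "planted": "1900-03-01"},
    {"species": "Oak", "district": "12", "height_m": "18", "planted": "1925-11-15"},
    {"species": "Plane tree", "district": "11", "height_m": "30.0", "planted": "1880-01-01"},
]

if __name__ == "__main__":
    for column, config in suggest_schema(rows).items():
        print(column, config)
```

A heuristic like this only looks at the values. The appeal of an AI-assisted approach is that it could also take column names and context into account, for example to recognize that a numeric district code is better treated as a category than as a number.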

What lessons were learned?

In addition to the specific lessons learned about our platform, we can draw some overall conclusions about the use of Artificial Intelligence within the data management industry:

- Artificial Intelligence opens up new perspectives: AI delivers impressive results in very short timescales. Our teams were able to design simple interfaces that help democratize data. This is a very encouraging initial conclusion for the next product innovations we hope to offer.

- AI is not always reliable: while 90% of the tests carried out worked perfectly, 10% did not meet the initial objective. What’s more, some of the tests carried out were inconclusive because of insufficient data.

- Artificial Intelligence comes with its own set of constraints: from the business model to questions of confidentiality, security and data sovereignty. It’s possible to move very quickly to create new functionality, but to meet our customers’ expectations, it will take longer to develop models that will give us total control over data security and quality.

The teams outdid themselves during these two days, and we were very pleasantly surprised by the discoveries we made! Of course, AI remains a complex subject to master, requiring a long-term investment. But it's well worth the effort, and the potential of this approach is huge, whether for improving existing functionalities or developing disruptive new ones.