The hidden costs of open source data projects

When planning your data project it can be tempting to base it around open source technology. However, this can generate additional costs and require greater resources to implement and manage, as our blog explains.

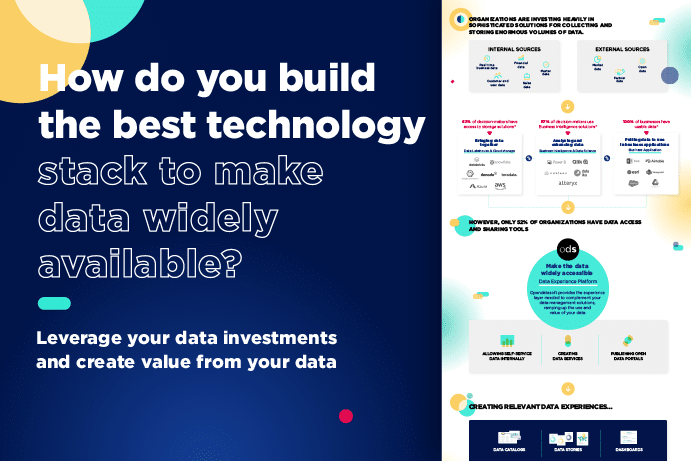

Providing employees, partners and citizens with access to understandable data helps increase productivity, collaboration and transparency. Organizations are therefore increasingly looking to share their data internally and externally through data marketplaces and portals.

However, many existing tech stacks lack the vital data experience layer to free their data and make it available to all. That’s why organizations in both the public and private sector are implementing new solutions to democratize their data and enable it to be used by the maximum number of people, without requiring them to learn expert skills.

The 3 options for sharing data more widely

As with any enterprise software, organizations essentially have three options when it comes to creating a data portal or marketplace. They can:

- Develop it internally themselves from scratch

- Implement an open source solution

- Buy a solution from a third-party provider

In-house development

Taking the first option, developing your own solution does mean it should be a close fit for your requirements and ways of working. However, coding your own software is resource-intensive, adding to the workload on your IT team. Development may be impacted by staff being diverted to other, more urgent projects, and while your team are experts on your business, they are unlikely to have created similar solutions before. That means they will be on a learning curve, which again slows development. There’s a big risk that they’ll fall foul of common pitfalls due to their lack of experience in this area. All of this adds considerably to cost and may mean solutions eventually don’t meet business or user requirements.

The new solution will then need to be supported and developed on an ongoing basis. This adds to costs and pressures on the IT team.

Consequently, most organizations, unless they are technology companies or have a large number of developers on staff, will choose to implement an external solution, either from an open source project or from a third-party provider.

Open source solutions

Developed by the programming community, open source solutions are available for any organization to implement and use for free. Particularly for organizations looking for a cost-effective way to democratize their data or launch open data projects, open source can appear extremely inviting. However, while open source software itself is free, there are a number of issues and hidden costs that need to be factored into your planning and business case:

Implementation costs

Getting your open source software deployed and integrated with your wider tech stack can be complex. It can require you to either learn new skills or to employ programmers with specialist knowledge to get your implementation up and running. This adds to costs, particularly if these programming resources are scarce. You may also need to create bespoke interfaces to connect to your existing software or data sources if they are not immediately available within the open solution you choose.

Support costs

Keeping your solution updated requires ongoing resources. You’ll need to add this to your IT team’s workload or again outsource it to a specialist, adding work or cost to your project. Depending on the maturity of the open source project, and the level of activity within its community, you may need to update to new versions relatively frequently, adding to overheads.

Security

Open source code is freely available and may become the target of hackers. While it is the responsibility of the community to spot and fix security vulnerabilities, this may not happen promptly due to a lack of skills and resources or the complexity of the project. Open source projects also rely on third-party libraries and modules, which can introduce vulnerabilities of their own if they have security issues.

Scalability

Many open source projects began as academic or community projects. Early users may therefore have had modest requirements when it comes to scalability and the amount of data, users and sources they needed to handle. That means these projects can be unproven at dealing with large volumes of data, making you a guinea pig to test their scalability and throughput. That can put constraints on the performance you actually receive in real-world operations, hurting the user experience and adoption.

Future development and customization

The ways organizations want to use data are changing at an accelerating pace. Therefore, you need a future-proofed platform that can be customized to your requirements, now and in the future. That requires strong support and comprehensive documentation that you can understand and act on. Not all open source projects have this, meaning you may be unable to innovate or implement new features within your solution. This risks a mismatch between your needs and what the software can deliver.

Lack of value creation

Open source projects are used by a wide variety of organizations, in many different ways. That means it may be difficult to learn from companies or groups that are using the software in a radically different way to your business. And, while there are forums and meetups for the most popular projects, sharing of relevant best practice and potential use cases may be lacking. This means you may be on your own when it comes to developing or extending the solution, particularly if you are using it differently to others.

All of these factors mean that you are likely to have to invest additional time, resource and effort in implementing and managing open source solutions. Their real costs will therefore increase, making them far from a free option. Equally, you may struggle to create compelling use cases around the technology that deliver value, negating the potential benefits of data sharing.
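To make the hidden-cost argument concrete, here is a minimal sketch that totals one-off and recurring costs over several years. The class name, field names and every figure are hypothetical placeholders rather than benchmarks; substitute your own estimates.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Cost inputs for one option. Every figure used below is a made-up placeholder."""
    name: str
    license_per_year: float      # recurring license fee (0 for open source)
    implementation_once: float   # one-off deployment and integration cost
    support_per_year: float      # recurring updates, patching and support effort

    def total_cost(self, years: int) -> float:
        """One-off cost plus recurring costs over the given number of years."""
        recurring = self.license_per_year + self.support_per_year
        return self.implementation_once + years * recurring


# Hypothetical inputs: a free license, but non-zero implementation and support.
open_source = CostProfile("open source", 0, 60_000, 40_000)

three_year_total = open_source.total_cost(3)
print(f"{open_source.name}: {three_year_total:,.0f} over 3 years")
```

Creating a second `CostProfile` for a licensed solution, again with your own numbers, lets you compare both options over the same period.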

Working with a third-party solution

Working with a specialist provider for your data sharing needs is therefore a more logical approach for many organizations. While there are costs involved in licensing the solution, you gain a range of benefits:

Ongoing support and security

Clearly, vendors want to keep you as a customer. They therefore invest heavily in providing ongoing support to ensure your implementation remains up to date and runs smoothly. They work with specialists (such as cloud providers and security companies) to guarantee uptime and protect your data, and take responsibility for updates, patches and ensuring the system remains secure. After all, their business survival depends on it.

Specialist knowledge

Picking a specialist provider with a deep understanding of the data market and a large client base also delivers multiple advantages. It is likely they have been involved in similar implementations to yours, and can share best practice and ideas from other customers with you. This knowledge transfer helps bring your team up to speed quickly and encourages you to develop your implementation in new ways.

Cost-effective implementations

The level of support and help you’ll receive with your implementation should mean you don’t require specialist outside contractors. This brings down project costs and de-risks deployments. Look for a provider that offers comprehensive documentation for their solution so that you can easily add features yourself by following simple instructions.

Ongoing development roadmap

Vendors are continually developing their software to add new features in line with user needs. They normally have greater specialist resources and time to do this compared to open source communities, meaning that you will have access to the latest features, faster.

A robust, interoperable platform

Open source software may not have been deployed at scale, leading to issues when implemented within large organizations. Equally, it may not integrate with your other business systems, particularly if those systems are less commonly deployed. By contrast, vendors normally design their architectures and platforms to be extremely robust and scalable, delivering the features and interoperability that their customers require. For example, Opendatasoft automatically provides APIs for every dataset on the platform, enabling seamless integration and sharing, something that not every solution offers.
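As an illustration of what a per-dataset API means in practice, the sketch below builds the URL for fetching records from a single dataset. It assumes the path layout of the Opendatasoft Explore API v2.1. The domain and dataset identifier are hypothetical, so check the current API documentation before relying on it.

```python
from urllib.parse import urlencode


def records_url(domain: str, dataset_id: str, limit: int = 10) -> str:
    """Build a records URL, assuming the Explore API v2.1 path layout."""
    query = urlencode({"limit": limit})
    return (
        f"https://{domain}/api/explore/v2.1/catalog/datasets/"
        f"{dataset_id}/records?{query}"
    )


# Hypothetical portal domain and dataset identifier, for illustration only.
example_url = records_url("data.example.org", "bike-stations", limit=5)
print(example_url)
```

Any HTTP client can then request that URL and receive the records as JSON, which is what makes it straightforward to connect a dataset to other business systems.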

Access to a unique community

Clearly, open source tools have a community around them, developing the software and sharing technical best practice. Good vendors replicate and extend this community approach, providing access to information, best practices and other customers to learn from. For example, Opendatasoft’s Data hub brings together over 30,000 datasets that are freely available to share and combine with your own. This openness helps share real-world, relevant experiences to generate new ideas and use cases that directly increase the value you gain from your implementation.

Data sharing is business critical. Whether it is creating an open data portal, an internal self-service data marketplace or building a community for partners, your project has the potential to transform productivity, transparency, efficiency and decision-making. You therefore need a solution that scales to meet your needs, connects to your existing and future systems and delivers a high-quality experience to your users. This means that going down the build-your-own or open source pathways can be a false economy, leading to increased costs and less comprehensive functionality, hampering your move to data democratization.

At Opendatasoft we’ve helped our customers build more than 2,000 data projects globally across all industries. Using our all-in-one platform enables them to create and share new data experiences that are more searchable, more relevant and more memorable. Our powerful technology is backed by comprehensive support, deep understanding of data, a commitment to innovation and a proactive team of Customer Success Managers to ensure you maximize the benefits from your investment. Contact us to find out more.