Everything You Need to Know about Using APIs with Opendatasoft

Over the past few years, APIs have become an essential part of any open data platform. They are indispensable if you want your data to be reusable. However, not all APIs are created equal. In this article, we will present some of the differentiating aspects of the Opendatasoft API feature and how it complies with the recommendations of 18F.

API Anatomy

The best way to explain how to use APIs is to break down the pieces that make up an API query. We will also include some pro tips from 18F, the digital services agency within the US General Services Administration. I like 18F because they are good at what they do and fairly transparent about how they evaluate things like APIs. Below is a simple list of the anatomical parts of an API. We will use this list to walk through building a URL, reading a JSON file, and exploring and evaluating a few APIs using the 18F evaluation methodology:

- Protocol: HTTPS://, the secure protocol that browsers and other clients use to access data;

- State: Is the API RESTful? Does it allow create, append, and delete operations?

- URLs: Uniform Resource Locators are used by browsers and APIs to connect with data;

- JSON: JavaScript Object Notation, the format of the data object returned from the URL over HTTPS://;

- API Standards: the guidelines from 18F that make things easier for everyone.

A URL is the https:// address in your browser's address bar. URLs are the strings that interact with the API and return data. The data that is returned will usually be a JSON file. JSON is based on a subset of JavaScript's syntax. It is ideal as a data exchange format because it is easy for humans to read and easy for machines to parse. In fact, 18F's API Standards say this about JSON:

“Just use JSON. JSON is an excellent, widely supported transport format, suitable for many web APIs.”

Supporting JSON and only JSON is a practical default for APIs, and generally reduces complexity for both the API provider and consumer. We will look at 18F guidelines around JSON a bit later in this article.
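To make "easy for machines to parse" concrete, here is a minimal sketch using only Python's standard `json` module. The restaurant record is made up for illustration; it is not taken from any real dataset.

```python
import json

# A small, hypothetical JSON document describing one restaurant.
text = '{"name": "Example Cafe", "city": "WAKE FOREST", "open": true, "tags": ["coffee", "bakery"]}'

# One call turns JSON text into native data structures (a dict here).
record = json.loads(text)
print(record["city"])          # WAKE FOREST
print(type(record).__name__)   # dict

# One call turns them back into indented, human-readable JSON text.
print(json.dumps(record, indent=2))
```

The same round trip works in virtually every modern language, which is a large part of why a JSON-only API keeps things simple for both provider and consumer.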

18F, the private sector (including Opendatasoft), and federal agencies such as the General Services Administration have strongly advocated common approaches to defining APIs and schemas. Kin Lane, the API Evangelist, had this to say:

“The last administration worked hard to put forth API design guidelines, something that has been continued by USDS and 18F, but this needs to continue in practice. You can see an example of this in action over at the GSA with their prototype City Pairs API–which is a working example of a well designed API, portal, and documentation that any agency can fork, and reverse engineer their own design compliant API.”

The current administration (as of July 28, 2017) has taken most of the APIs offline. That does not mean 18F has lost its influence. Much of the work done by the OpenAPI Initiative and others is remarkably similar to the work done by 18F. The goal of standardizing and reusing APIs through common frameworks is here to stay.

Perhaps more significantly, frameworks have evolved to make the construction of APIs more consistent and their documentation easier to both write and use. Swagger, whose specification is now known as the OpenAPI Specification, is one of the largest frameworks, and Opendatasoft uses it. Frameworks and standards are important for reuse: they make creating and using APIs much easier. Think of a framework like a library. A library stores items in a repeatable way using a standard information architecture. It doesn't matter whether you use a library in Boston or Raleigh: the books and materials are catalogued the same way.

APIs used to be difficult to figure out. But with the rise of standards, including universal design, both the documentation and the tools themselves are now expected to be easy to use, reuse, and replicate. As an example, Kin Lane shared an API of APIs published by the GSA; at the time of his article, it listed over 400 APIs.

How to Use URLs

We use URLs to get data back from APIs in JSON format. When I said JSON was human readable, I left out a detail: I use a browser extension to make JSON easier to read. The extension I prefer is JSON Formatter, which can be downloaded from the Chrome Web Store. Several JSON tools are available for Chrome.

Here is a URL where they can be accessed:

https://chrome.google.com/webstore/search/json%20viewer?hl=en

URLs contain information, and their parts can be used to return specific bits of data. The URL above goes to the Chrome Web Store and searches its database for JSON tools. The path /webstore/search/json%20viewer tells the store to run a search for "json viewer" (%20 is the URL-encoded space), and the query string ?hl=en sets the interface language to English, because my Chrome browser settings indicate that I am an English speaker. This may seem obvious, but it is important to know which part of a URL does what.
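As a sketch of that breakdown, Python's standard `urllib.parse` module can split the Chrome Web Store URL into its parts. Nothing here contacts the network; it only parses the string.

```python
from urllib.parse import urlsplit, parse_qs, unquote

url = "https://chrome.google.com/webstore/search/json%20viewer?hl=en"
parts = urlsplit(url)

print(parts.scheme)            # https  (the protocol)
print(parts.netloc)            # chrome.google.com  (the host)
print(unquote(parts.path))     # /webstore/search/json viewer  (%20 decoded to a space)
print(parse_qs(parts.query))   # {'hl': ['en']}  (query-string parameters)
```

`parse_qs` returns a list for each parameter because a query string may legally repeat the same key.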

URL Specifics and API Universal Design

I am going to start with a very basic query using the Opendatasoft API. It has all of the elements recommended by 18F's API Standards:

- Clear and concise language to understand the API endpoint;

- No verbs used as endpoint identifiers;

- Well-documented in plain, easy-to-understand language.

This is a short list, and there is certainly more to universal design principles than three bullets. I am going to demonstrate Opendatasoft's adherence to API universal design standards using API V1.

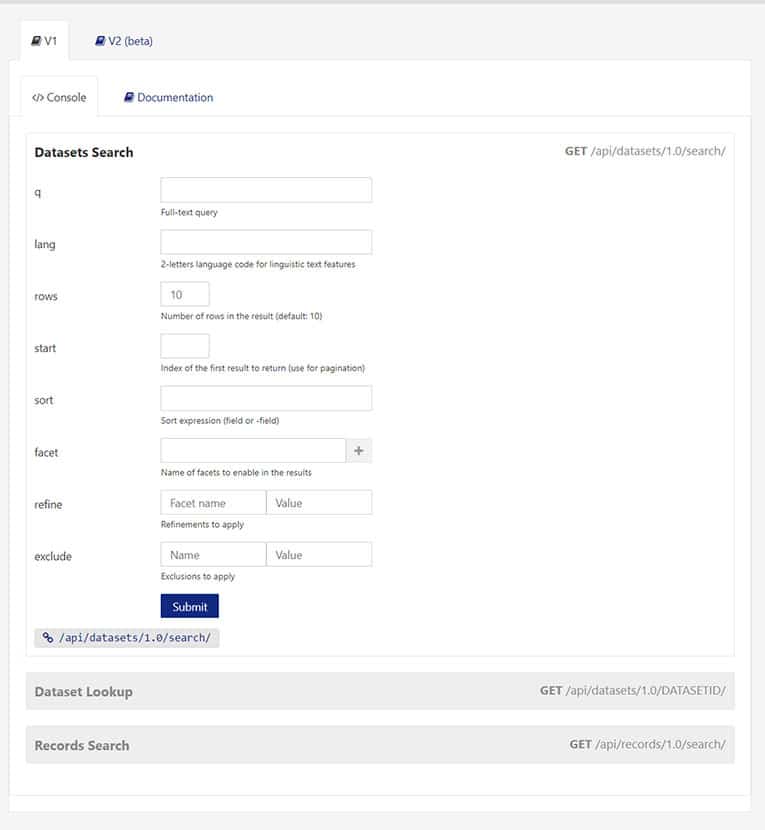

This is the V1 Opendatasoft Console for the Town of Cary.

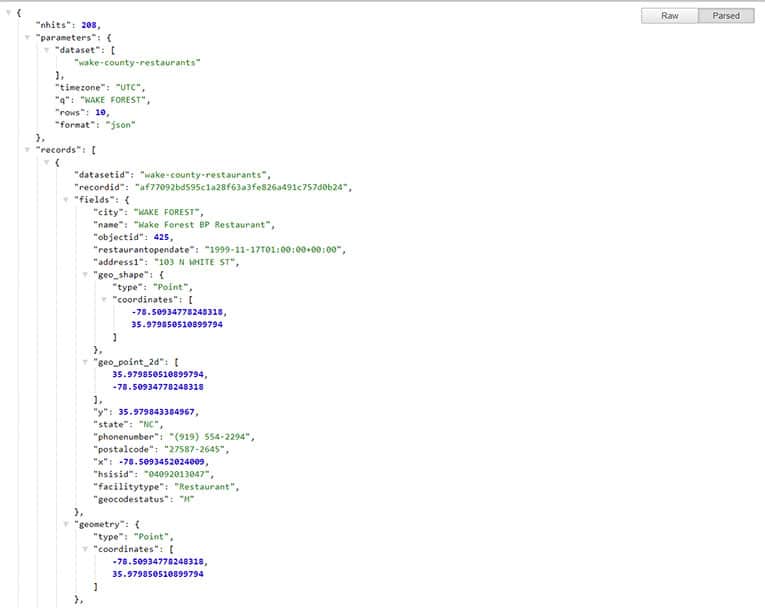

https://data.townofcary.org/api/records/1.0/search/?dataset=wake-county-restaurants&q=WAKE+FOREST

The URL above is a query using Opendatasoft's API V1. Just by looking at it, we can see that it complies closely with 18F's universal design guidelines. First, it uses the secure Hypertext Transfer Protocol: the standard names HTTPS:// as the preferred method of access. Second, the URL is human readable and persistent. data.townofcary.org/api/records will always be the root of the API for the Town of Cary's open data portal, and whatever the future brings, the Town of Cary can keep this URL with any system that supports it. Third, the query itself is human readable.

So, /search/?dataset=wake-county-restaurants&q=WAKE+FOREST is the string I am using to filter Wake County restaurants down to those located in Wake Forest, where I live. Some other APIs use randomly generated alphanumeric identifiers as placeholders, which is not universal design. If I wanted to share the query with someone else and my string contained random, unreadable characters, I would need extra documentation to explain the context of the query. Using plain, human-readable strings like our Town of Cary query also means ambient findability is higher. This is exactly what 18F's universal design standard recommends.
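Because the query string is human readable, it is also easy to generate in code. The sketch below rebuilds the Town of Cary URL from plain words; `records_search_url` is a hypothetical helper name, and the only parameters used are the two that appear in the URL above (`dataset` and `q`).

```python
from typing import Optional
from urllib.parse import urlencode

# Root of the Town of Cary portal's API V1 records-search endpoint (from the URL above).
BASE = "https://data.townofcary.org/api/records/1.0/search/"


def records_search_url(dataset: str, query: Optional[str] = None) -> str:
    """Build an API V1 records-search URL (hypothetical helper, not a library call)."""
    params = {"dataset": dataset}
    if query:
        # "q" is the full-text search parameter used in the article's URL.
        params["q"] = query
    # urlencode escapes values and encodes spaces as "+".
    return BASE + "?" + urlencode(params)


url = records_search_url("wake-county-restaurants", "WAKE FOREST")
print(url)
# https://data.townofcary.org/api/records/1.0/search/?dataset=wake-county-restaurants&q=WAKE+FOREST
```

Sharing the call `records_search_url("wake-county-restaurants", "WAKE FOREST")` tells a colleague exactly what is being asked for, with no lookup table of opaque IDs.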

What do you think I pulled out of the dataset when I ran this query?

Let's break it down. You have to know a little about what you are looking for to know what your options are. In this case, I looked in the catalog of the Town of Cary's open data portal to see the names of the datasets, and I found one I was interested in. I also read a bit of the documentation so I knew how to “GET” JSON data back from my query. /api/records/1.0/search/ is how I invoke API V1 to search the records of a dataset on an Opendatasoft portal.

That endpoint is described in the Opendatasoft API V1 documentation.
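The catalog itself can also be searched through the API. This sketch assumes the V1 dataset-search endpoint `/api/datasets/1.0/search/`; the response shown is a hypothetical, heavily trimmed sample (a real response carries many more keys), and the dataset title is invented. It parses offline rather than calling the live portal.

```python
import json
from urllib.parse import urlencode

PORTAL = "https://data.townofcary.org"

# Assumed API V1 catalog search: lists datasets rather than records.
catalog_url = PORTAL + "/api/datasets/1.0/search/?" + urlencode({"q": "restaurants"})
print(catalog_url)  # https://data.townofcary.org/api/datasets/1.0/search/?q=restaurants

# Hypothetical, trimmed catalog response used for illustration only.
sample = """
{
  "nhits": 1,
  "datasets": [
    {"datasetid": "wake-county-restaurants",
     "metas": {"title": "Wake County Restaurants"}}
  ]
}
"""

catalog = json.loads(sample)
# The dataset identifier is what goes into the "dataset" parameter of a records search.
ids = [d["datasetid"] for d in catalog["datasets"]]
print(ids)  # ['wake-county-restaurants']
```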

The instructions do not require any programming experience. I know, because I stopped being a web developer long before APIs were commonplace. All I have to do is build a URL with the name of the dataset I want to query, wake-county-restaurants, and then decide whether I want the whole dataset or a subset. I only wanted data from Wake Forest, so I added a full-text query at the end: &q=WAKE+FOREST. That whittled the results down from 3,282 to 207. Note: I am only showing 1 of the 207 records.

The record I am showing is human readable (the Wake Forest BP restaurant, with its location, facility type, and so on), and it is also standard, machine-readable JSON that can easily be reused in another application.
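Reusing that JSON in another application can be as short as the sketch below. The top-level keys `nhits`, `records`, and `fields` follow the API V1 records-search layout, but the sample is hypothetical and trimmed: the record ID, the field names inside `fields`, and their values are invented for illustration, so check a real response before relying on them.

```python
import json
from typing import List, Tuple

# Hypothetical, trimmed API V1 records-search response (field names are assumptions).
sample = """
{
  "nhits": 207,
  "records": [
    {"recordid": "example-id",
     "fields": {"name": "WAKE FOREST BP", "city": "WAKE FOREST",
                "facilitytype": "Restaurant"}}
  ]
}
"""


def summarize(response: dict) -> List[Tuple[str, str]]:
    """Return (name, facility type) pairs from a records-search response."""
    pairs = []
    for record in response.get("records", []):
        fields = record.get("fields", {})
        # .get() with a default tolerates records that lack a field.
        pairs.append((fields.get("name", ""), fields.get("facilitytype", "")))
    return pairs


data = json.loads(sample)
print(data["nhits"], "matching records")  # 207 matching records
print(summarize(data))                    # [('WAKE FOREST BP', 'Restaurant')]
```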

Proper JSON Objects

I noticed that some resources published by 18F use YAML (.yml) rather than formatted JSON, but they still describe the same kind of object; YAML 1.2 is a superset of JSON. JSON is the emerging standard, though, not just for 18F but also for OpenAPI through Swagger. This is why all data sent through V1 and V2 of the Opendatasoft API is formatted JSON in a JSON object. Sometimes an array is used, but the data can be retrieved as an object.
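In Python's `json` module, a JSON object becomes a `dict` and a JSON array becomes a `list`, so a client can accept either shape. The helper below is a hypothetical sketch: its name and the assumption that an object wraps its records under a `"records"` key are illustrative, not a documented contract.

```python
import json
from typing import Any, List


def as_record_list(payload: Any) -> List[Any]:
    """Return the records from a parsed JSON payload (hypothetical helper).

    Accepts either a top-level JSON object that wraps its records under a
    "records" key, or a bare top-level JSON array of records.
    """
    if isinstance(payload, dict):   # JSON object
        return payload.get("records", [])
    if isinstance(payload, list):   # JSON array
        return payload
    raise TypeError("expected a JSON object or array at the top level")


wrapped = json.loads('{"nhits": 2, "records": [{"id": 1}, {"id": 2}]}')
bare = json.loads('[{"id": 1}, {"id": 2}]')
print(as_record_list(wrapped) == as_record_list(bare))  # True
```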

A Final Note: "Always Use HTTPS://"

Both the General Services Administration and 18F consider HTTPS:// out of the box better than trying to retrofit a security layer onto HTTP://.

The following list is not exhaustive, but it is comprehensive enough and aligns with both the OpenAPI Specification and the way Opendatasoft handles HTTPS:

- Security: The contents of the request are encrypted across the Internet.

- Authenticity: A stronger guarantee that a client is communicating with the real API.

- Privacy: Enhanced privacy for apps and users using the API. HTTP headers and query string parameters (among other things) will be encrypted.

- Compatibility: Broader client-side compatibility. For CORS requests to the API to work on HTTPS websites without being blocked as mixed content, those requests must be over HTTPS.

- HTTPS should be configured using modern best practices, including ciphers that support forward secrecy, and HTTP Strict Transport Security.
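On the client side, the same principles can be enforced in a few lines. This is a minimal sketch using only the Python standard library: `require_https`, `has_hsts`, and `fetch_json_text` are hypothetical helper names. `ssl.create_default_context()` turns on certificate and hostname verification, and the HSTS check simply looks for a `Strict-Transport-Security` response header.

```python
import ssl
import urllib.request
import warnings
from typing import Iterable
from urllib.parse import urlsplit


def require_https(url: str) -> str:
    """Refuse plain-HTTP URLs so requests are never sent unencrypted."""
    if urlsplit(url).scheme.lower() != "https":
        raise ValueError(f"refusing non-HTTPS URL: {url}")
    return url


def has_hsts(header_names: Iterable[str]) -> bool:
    """True if the response advertises HTTP Strict Transport Security."""
    return any(name.lower() == "strict-transport-security" for name in header_names)


def fetch_json_text(url: str) -> str:
    """Fetch a URL over verified HTTPS and return the body as text (needs network)."""
    context = ssl.create_default_context()  # verifies certificates and hostnames
    with urllib.request.urlopen(require_https(url), context=context, timeout=10) as resp:
        if not has_hsts(resp.headers.keys()):
            warnings.warn("response has no Strict-Transport-Security header")
        return resp.read().decode("utf-8")
```

For example, `require_https("http://data.townofcary.org/")` raises a `ValueError` before any request leaves the machine.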

What's in the Future?

Mark Headd works for 18F. He is also a writer and pundit on topics like open data and open data portals. His article “I Hate Open Data Portals” is a good read, and in spite of all the progress made on the open data API front, he makes some good points. Most of the article is about open data portals as a whole. The point I want to restate is one where he is basically right: we have an obligation to make data as easy to access as GitHub does. That being said, one can already access the entire data catalog of an Opendatasoft portal. However, per Mark's point, we do not yet have versioning.

Continuous Improvement

I have spent some time using Opendatasoft's V1 (production) API to explain how APIs work and how ours maps to the OpenAPI standard and to what 18F defines as open data API best practices. Opendatasoft takes notice of standards like those at 18F. Like our competitors, we at Opendatasoft have come a long way in making our APIs useful, well documented, and easy to use.

We are still on the journey.